
Tunnel Vision

22 June 2012 • Ype Wijnia

asset management strategy, risk management, policy development, planning, program management, change management

In recent years there have been several accounts of people being sentenced severely for crimes they did not commit. Examples in the Netherlands are the Putten killings, the murder in Schiedam, Lucia de B and recently the case in Breda. In all cases the prosecutors were guilty of tunnel vision. They were so convinced they had caught the right people that they did not consider the idea that the suspects might not be guilty. From a personal viewpoint, this can be understood. When there are no eyewitnesses or video surveillance footage, the detectives have to rely on indirect evidence. Once a theory is developed on who did it, one is generally relieved there is a suspect. If the suspect cannot prove he is not guilty (who has an alibi for every moment of the day?) or is simply not believed (because the alibi is doubted on details, or the suspect confessed under pressure earlier), then the suspect hangs, though fortunately not literally in the Netherlands.

But there is a major problem. Eyewitness reports are notoriously unreliable. People have a very bad memory for facts (see the work of the late Professor W.A. Wagenaar[1]) and memories can be planted by others. And regarding confessions: using the right approach one can make anybody confess to anything and even make the person believe his own confession. This is precisely the reason suspects cannot be convicted based on their own confession alone.

However, tunnel vision becomes malicious if prosecutors keep evidence that the suspect is not guilty to themselves. Then it is a matter of a prosecutor wanting to establish himself as a crime fighter, even though he knows the suspect is not guilty, by picking on the unlucky person who happened to be in the wrong place at the wrong time. Could a similar thing happen in asset management? The answer is yes, and it is possible across the whole range from the strategic to the operational level.

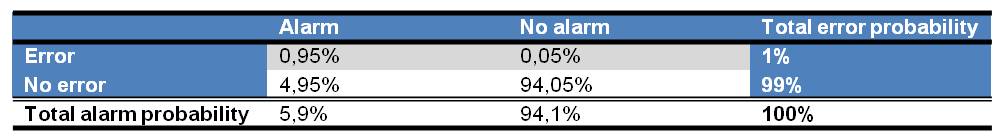

A classic example of tunnel vision is the near meltdown of the nuclear power plant at Harrisburg (Three Mile Island) in 1979, as described in Charles Perrow's Normal Accident Theory[2]. The control room of a power plant contains many meters and alarms. Given that a nuclear power plant is a high-risk asset, that is what one should expect. However, every device can fail, and this applies to meters and alarms as well. Even at the level of individual appliances this can result in unexpected outcomes. Suppose an event has a probability of occurrence of 1%, and the alarm indicating that the event has happened has a reliability of 95%. That reliability applies both to detecting the error and to indicating the normal situation. The result is given in the truth table below.

The table shows that there is a probability of 5.9% of having an alarm, whereas only about one in six alarms indicates a true error. The percentages in a nuclear power plant may be different, but every operator knows that alarms can be false. If alarms sound very often, you simply stop responding to them. The operator presumes they are incorrect and interprets everything else according to this presumption. If the alarm then proves to be true, major accidents can happen. And this seems to have been the case in Harrisburg.
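The figures in the alarm example can be checked with a few lines of Python. This is only a minimal sketch under the assumptions stated in the text (a 1% event probability and a 95% reliability that applies equally to detecting the event and to indicating the normal situation); the function name `alarm_statistics` and the dictionary keys are chosen here for illustration.

```python
def alarm_statistics(p_event, reliability):
    """Return the four cells of the truth table plus two derived figures.

    Assumes the same reliability for detecting the event and for
    correctly indicating the normal situation, as in the text.
    """
    true_alarm = p_event * reliability                   # event, alarm sounds
    missed_event = p_event * (1 - reliability)           # event, no alarm
    false_alarm = (1 - p_event) * (1 - reliability)      # no event, alarm sounds
    correct_silence = (1 - p_event) * reliability        # no event, no alarm

    p_alarm = true_alarm + false_alarm                   # any alarm at all
    return {
        "true_alarm": true_alarm,
        "missed_event": missed_event,
        "false_alarm": false_alarm,
        "correct_silence": correct_silence,
        "p_alarm": p_alarm,
        "p_event_given_alarm": true_alarm / p_alarm,     # share of alarms that are real
    }


stats = alarm_statistics(p_event=0.01, reliability=0.95)
for name, value in stats.items():
    print(f"{name:22s} {value:8.2%}")
```

With these inputs the alarm probability comes out at about 5.9% and the share of true alarms at about 16%, which is the "one in six" mentioned above. Changing `p_event` shows how quickly the share of true alarms drops when the underlying event becomes rarer.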

On a tactical level a similar problem can occur. Suppose an asset engineer has to investigate a certain failure. And suppose the engineer has investigated similar problems shortly before, in which the same component of the asset failed, for example the oil pump. If the new failure is on the same type of asset, it is quite normal to judge the new failure using the same mental model. In other words, the first idea will be that the oil pump failed. If that proves to be the case, it is easy to jump to the conclusion that it is time for a large-scale revision program for the oil pumps. But this may neglect the possibility that the breakdown of the oil pump is the result of the failure, and not the cause. An improper analysis could then result in unnecessary costs.

The strategic level is also prone to tunnel vision. Perhaps the most famous example is the millennium bug. In short, at the end of the last century people discovered that many computer systems used only two digits to indicate the year. When 1999 became 2000, those digits would go from 99 to 00, which could pose a problem as 00 is smaller than 99 and not larger. The problems foreseen included the collapse of the financial system and outages of utilities (power, gas, water); even if these could be restarted, many devices would no longer work because they would be outside their maintenance interval. In preparation for the centennial party, large amounts were spent on expanding the year field in computer systems by two digits and on developing emergency scenarios. Everybody was in the highest state of emergency on New Year's Eve 1999. Any disaster junkie would be glued to the television, as the world would slowly go dark starting at noon in the Christmas Islands, followed by New Zealand, Australia, Japan, China, India, Russia and so on. But nothing happened, anywhere. In hindsight the millennium bug prophets claimed that this was because of the large amounts spent on solving the problem, but that is utter nonsense. It would be the first time in human history that a plan had been worked out to such a level of perfection that none of the billions of opportunities for things to go wrong was overlooked. That is of course not impossible in itself, but how likely would it be? The best guess is that it is much more likely that the problem did not exist in the first place. Many of the devices that were supposed to fail at the end of the millennium did not have a clock or calendar on board to be influenced by the problem.
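The mechanism behind the feared problem, a year stored in two digits, can be illustrated with a small Python sketch. The function names and the maintenance dates are hypothetical and chosen only for illustration; the sketch says nothing about how many real systems actually computed intervals this way.

```python
def two_digit(year):
    """Keep only the last two digits of a year, as in a two-digit year field."""
    return year % 100


def elapsed_two_digit(start_year, end_year):
    """Elapsed years computed from two-digit year fields only."""
    return two_digit(end_year) - two_digit(start_year)


def elapsed_four_digit(start_year, end_year):
    """Elapsed years computed from full four-digit years."""
    return end_year - start_year


# Hypothetical example: last maintenance in 1998, checked in 1999 and in 2000.
print(elapsed_two_digit(1998, 1999), elapsed_four_digit(1998, 1999))
print(elapsed_two_digit(1998, 2000), elapsed_four_digit(1998, 2000))
```

For the check in 2000 the two-digit version yields a negative interval, because 00 is smaller than 98, whereas the four-digit version yields two years. A device without a clock or calendar never performs such a calculation at all.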

One would expect that over time people would start to realize that the expenditure on solving the millennium problem was perhaps a bit of an overreaction, but no. To this day, there are people who claim that the risk of the millennium bug was too large to accept, even if the probability of the problem not actually occurring were very large. This is the precautionary principle. In some cases it can be an argument for doing something. However, in this case the argument was only brought forward after the expenditure, and not beforehand. Beforehand, the message was that the problem certainly would (and not could) occur. That sounds like locking somebody up first and only then thinking about a reason. And if that reason cannot be found, you just claim it is to prevent the person from committing a crime in the future, a precautionary measure so to speak.

Ype Wijnia is a partner at AssetResolutions BV, a company he co-founded with John de Croon. They take turns giving their view on an aspect of asset management in a weekly column. The columns are published on the website of AssetResolutions, http://www.assetresolutions.nl/en/column

[2] Charles Perrow, Normal Accidents: Living with High-Risk Technologies, 1984

